About Me

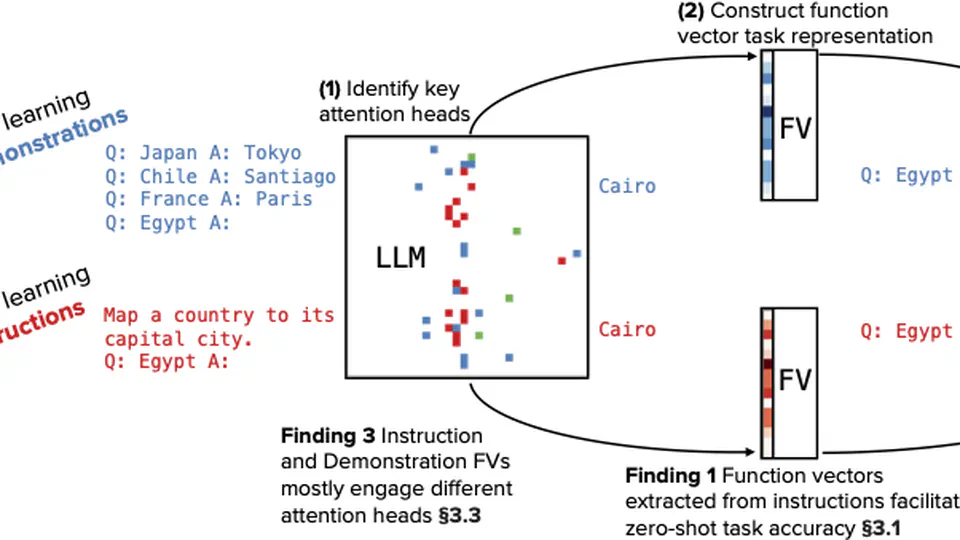

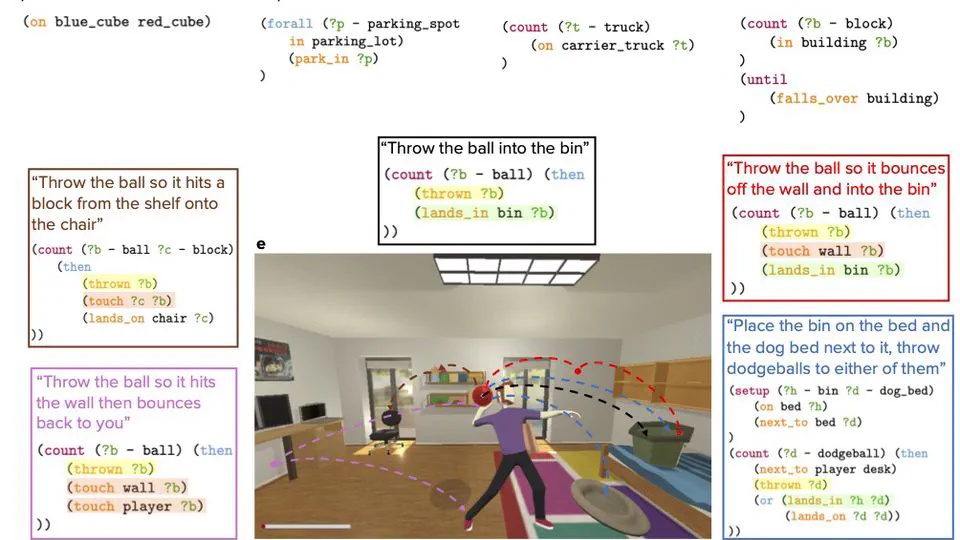

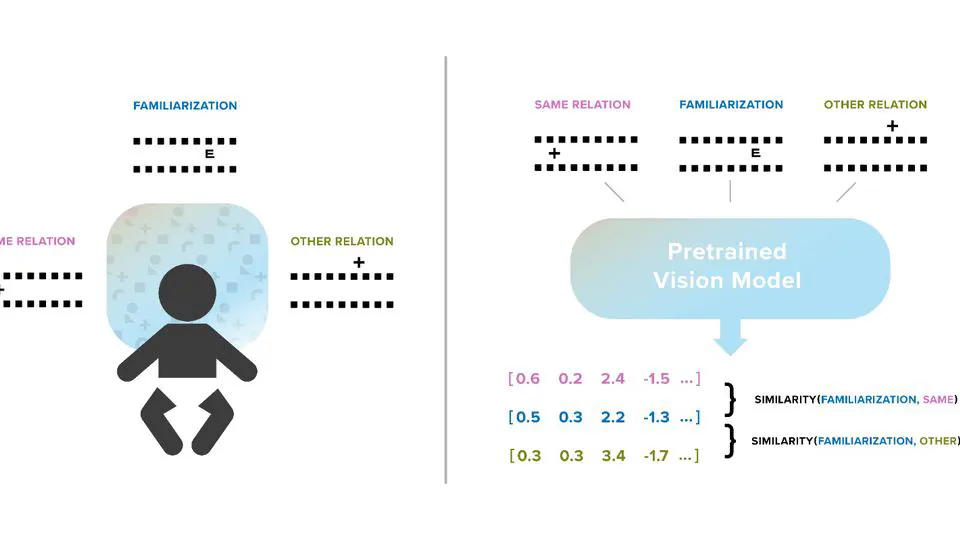

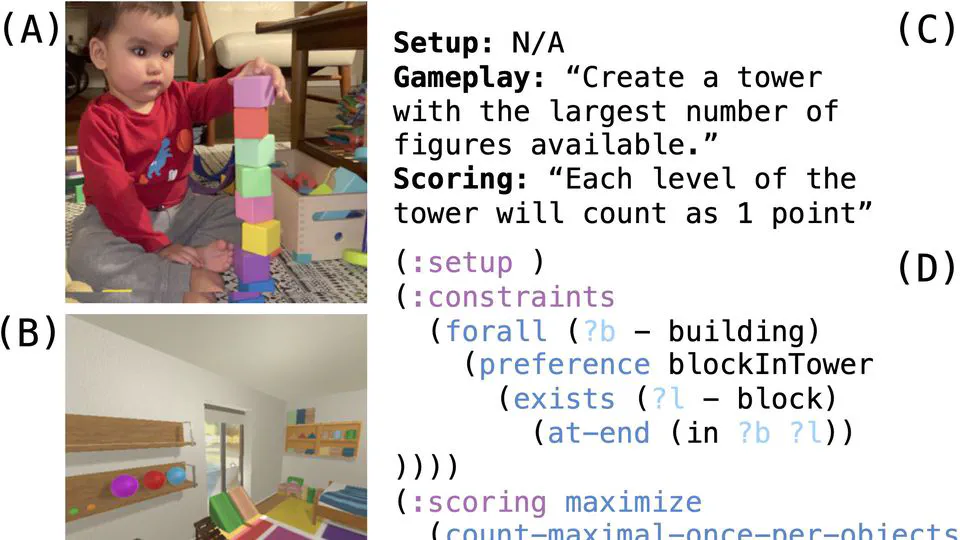

I’m a cognitive scientist and machine learning researcher working as a Research Scientist at FAIR under Meta Superintelligence Labs, where I research how to evaluate (and improve) theory of mind and other mental modelling capabilities in large language models. Before that, I did my PhD at the NYU Center for Data Science, advised by Brenden Lake and Todd Gureckis. My dissertation, titled “Goals as Reward Producing Programs”, offers theoretical, empirical, and computational advances in the study of goals: how do we represent, reason about, and come up with them? In parallel, I spent the last year of my PhD working on mechanistic interpretability as a visiting researcher at Meta FAIR with Adina Williams.

In my non-academic life, I live with my wife Sarah and our dog Lila, and spend time making homemade hot sauces, playing board games, playing ultimate frisbee (poorly), and lifting weights (slightly less poorly).

- Goal representation and generation

- Human-like goals for agents

- Computational cognitive science

- Goal/intent inference in LLMs

PhD in Data Science, New York University

MPhil in Data Science, New York University

BSc in Computational Sciences, Minerva University