Do different prompting methods yield a common task representation in language models?

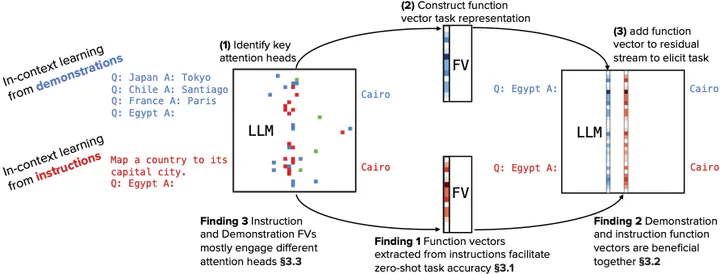

We compare in-context learning task representations formed from demonstrations with those formed from instructions using function vectors (FVs). The process of extracting FVs is shown in Steps (1)-(3). We highlight several findings: we successfully extract FVs from instructions; instruction FVs offer complementary benefits when applied with demonstration FVs; and different prompting methods yield distinct task representations (highlighted squares on the left LLM are Llama-3.1-8B-Instruct attention heads: those identified by demonstrations only, those identified by instructions only, and shared ones; columns are layers, rows are head indices).

Demonstrations and instructions are two primary approaches for prompting language models to perform in-context learning (ICL) tasks. Do identical tasks elicited in different ways result in similar representations of the task? An improved understanding of task representation mechanisms would offer interpretability insights and may aid in steering models. We study this through function vectors, recently proposed as a mechanism to extract few-shot ICL task representations. We generalize function vectors to alternative task presentations, focusing on short textual instruction prompts, and successfully extract instruction function vectors that promote zero-shot task accuracy. We find evidence that demonstration- and instruction-based function vectors leverage different model components, and offer several controls to dissociate their contributions to task performance. Our results suggest that different task presentations do not induce a common task representation but elicit different, partly overlapping mechanisms. Our findings offer principled support to the practice of combining textual instructions and task demonstrations, imply challenges in universally monitoring task inference across presentation forms, and encourage further examinations of LLM task inference mechanisms.
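
To make the function-vector idea concrete, here is a minimal sketch in plain Python. It is not the authors' code. It assumes the commonly described formulation in which an FV is the sum, over a selected set of attention heads, of each head's output averaged across task prompts, and in which the FV is then added to a hidden state to steer the model. The head sets are taken as given here. The toy numbers and the helper names (`mean_head_outputs`, `function_vector`, `head_overlap`, `add_to_hidden`) are hypothetical and chosen only for illustration.

```python
# Toy illustration only: real head outputs would come from a model's
# attention layers; here they are small hand-written vectors.

def mean_head_outputs(head_outputs):
    """Average each head's output vector over prompts.

    head_outputs maps (layer, head) -> list of vectors, one per prompt.
    """
    means = {}
    for key, vectors in head_outputs.items():
        dim = len(vectors[0])
        means[key] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return means


def function_vector(mean_outputs, selected_heads):
    """Sum the mean outputs of the selected heads into a single vector."""
    dim = len(next(iter(mean_outputs.values())))
    fv = [0.0] * dim
    for key in selected_heads:
        for i, value in enumerate(mean_outputs[key]):
            fv[i] += value
    return fv


def head_overlap(demo_heads, instr_heads):
    """Split two head sets into demonstration-only, instruction-only, and shared."""
    demo, instr = set(demo_heads), set(instr_heads)
    return {
        "demo_only": demo - instr,
        "instr_only": instr - demo,
        "shared": demo & instr,
    }


def add_to_hidden(hidden, fv, scale=1.0):
    """Steer a hidden state by adding a (scaled) function vector to it."""
    return [h + scale * f for h, f in zip(hidden, fv)]


# Hypothetical head outputs for two prompts, keyed by (layer, head).
toy_outputs = {
    (0, 0): [[1.0, 2.0], [3.0, 4.0]],
    (1, 2): [[0.0, 1.0], [2.0, 1.0]],
    (2, 1): [[1.0, 1.0], [1.0, 1.0]],
}
means = mean_head_outputs(toy_outputs)

# Assumed head sets; how heads are selected is not shown in this sketch.
demo_heads = [(0, 0), (1, 2)]
instr_heads = [(1, 2), (2, 1)]

demo_fv = function_vector(means, demo_heads)
instr_fv = function_vector(means, instr_heads)
overlap = head_overlap(demo_heads, instr_heads)
steered = add_to_hidden([0.5, -0.5], demo_fv)
```

In this toy setup the two head sets share one head and differ in the others, which mirrors the kind of partial overlap between demonstration- and instruction-identified heads described above.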

Presented at NeurIPS 2025; preprint and code are linked above.